Last week, Apple previewed a number of updates meant to beef up child safety features on its devices. Among them: a new technology that can scan the photos on users’ devices in order to detect child sexual abuse material (CSAM). Though the change was broadly praised by some lawmakers and child safety advocates, it prompted immediate pushback from many security and privacy experts, who say the update amounts to Apple walking back its commitment to putting user privacy above all else.

Apple has disputed that characterization, saying that its approach balances both privacy and the need to do more to protect children by preventing some of the most abhorrent content from spreading more widely.

What did Apple announce?

Apple announced three separate updates, all of which fall under the umbrella of “child safety.” The most significant, and the one that’s gotten the bulk of the attention, is a feature that will scan iCloud Photos for known CSAM. The feature, which is built into iCloud Photos, compares a user’s photos against a database of previously identified material. If a certain number of those images is detected, it triggers a review process. If the images are verified by human reviewers, Apple will suspend that iCloud account and report it to the National Center for Missing and Exploited Children (NCMEC).

Apple also previewed new “communication safety” features for the Messages app. That update enables the Messages app to detect when sexually explicit photos are sent or received by children. Importantly, this feature is only available for children who are part of a family account, and it’s up to parents to opt in.

If parents do opt into the feature, they will be alerted if a child under the age of 13 views one of these photos. For children older than 13, the Messages app will show a warning upon receiving an explicit image, but won’t alert their parents. Though the feature is part of the Messages app, and separate from the CSAM detection, Apple has noted that the feature could still play a role in stopping child exploitation, as it could disrupt predatory messages.

Finally, Apple is updating Siri and its search capabilities so that it can “intervene” in queries about CSAM. If someone asks how to report abuse material, for example, Siri will provide links to resources to do so. If it detects that someone might be searching for CSAM, it will display a warning and surface resources to provide help.

When is this happening and can you opt out?

The changes will be part of iOS 15, which will roll out later this year. Users can effectively opt out by disabling iCloud Photos (instructions for doing so are available from Apple). However, anyone disabling iCloud Photos should keep in mind that it could affect their ability to access photos across multiple devices.

So how does this image scanning work?

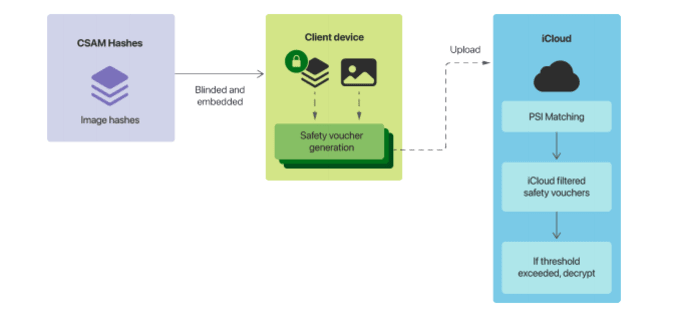

Apple is far from the only company that scans photos to look for CSAM. Apple’s approach to doing so, however, is unique. The CSAM detection relies on a database of known material, maintained by NCMEC and other safety organizations. These images are “hashed” (Apple’s official name for this is NeuralHash), a process that converts images to a numerical code that allows them to be identified even if they are modified in some way, such as by cropping or other visual edits. As previously mentioned, CSAM detection only functions if iCloud Photos is enabled. What’s notable about Apple’s approach is that rather than matching the images once they’ve been sent to the cloud, as most cloud platforms do, Apple has moved that process to users’ devices.
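To make the idea of a hash that survives small edits more concrete, here is a minimal sketch in Python. It uses a simple "average hash," a well-known perceptual hashing technique, and is not Apple's NeuralHash, whose internals are not described in this article. The tiny grayscale grids and the function names are invented purely for illustration.

```python
# Illustrative only: a simple "average hash", NOT Apple's NeuralHash.
# Images are modeled as small 2D lists of grayscale values (0-255).

def average_hash(pixels):
    """Return a bit string: 1 where a pixel is brighter than the image mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if value > mean else "0" for value in flat)

def hamming_distance(hash_a, hash_b):
    """Count the bit positions at which two equal-length hashes differ."""
    return sum(a != b for a, b in zip(hash_a, hash_b))

original = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [12, 190, 35, 225],
    [18, 205, 28, 215],
]
# A uniformly brightened copy, standing in for a light visual edit.
brightened = [[min(255, value + 20) for value in row] for row in original]
# An unrelated image with the light and dark areas swapped.
unrelated = [
    [200, 10, 220, 30],
    [210, 15, 230, 25],
    [190, 12, 225, 35],
    [205, 18, 215, 28],
]

print(hamming_distance(average_hash(original), average_hash(brightened)))  # 0
print(hamming_distance(average_hash(original), average_hash(unrelated)))   # 16
```

The edited copy produces the same hash as the original, while the unrelated image differs in every bit. A real perceptual hash has to cope with far more aggressive edits than this toy example does.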

Here’s how it works: Hashes of the known CSAM are stored on the device, and on-device photos are compared to those hashes. The iOS device then generates an encrypted “safety voucher” that’s sent to iCloud along with the image. If a device reaches a certain threshold of CSAM, Apple can decrypt the safety vouchers and conduct a manual review of those images. Apple isn’t saying what the threshold is, but has made clear a single image wouldn’t result in any action.

Apple has also published a detailed technical explanation of the process.
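The threshold logic described above can be sketched roughly as follows. This is a simplified illustration, not Apple's implementation: it leaves out the encryption of the safety vouchers entirely, the hash strings are placeholders, and the threshold value is an assumption, since Apple has not disclosed the real one.

```python
# Hypothetical sketch of threshold-based matching. It omits the encrypted
# "safety vouchers" the article describes and uses made-up values throughout.

KNOWN_HASHES = {"a1f3", "9c2e", "77b0"}  # placeholder strings, not real hashes
REVIEW_THRESHOLD = 3  # assumed value; Apple has not disclosed the real one

def count_matches(photo_hashes, known_hashes):
    """Count how many photo hashes appear in the known-hash set."""
    return sum(1 for photo_hash in photo_hashes if photo_hash in known_hashes)

def needs_manual_review(photo_hashes, known_hashes=KNOWN_HASHES,
                        threshold=REVIEW_THRESHOLD):
    """Return True only once the number of matches reaches the threshold."""
    return count_matches(photo_hashes, known_hashes) >= threshold

print(needs_manual_review(["a1f3", "0000", "1234"]))          # False (one match)
print(needs_manual_review(["a1f3", "9c2e", "77b0", "5555"]))  # True (three matches)
```

In this sketch a single match never triggers a review, which mirrors Apple's statement that one image alone would not result in any action.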

Why is that this so controversial?

Privacy advocates and security researchers have raised a number of concerns. One of these is that this feels like a major reversal for Apple, which five years ago refused the FBI’s request to unlock a phone and has put up billboards stating “what happens on your iPhone stays on your iPhone.” To many, the fact that Apple created a system that can proactively check your images for illegal material and refer them to law enforcement feels like a betrayal of that promise.

In a statement, the Electronic Frontier Foundation called it “a shocking about-face for users who have relied on the company’s leadership in privacy and security.” Likewise, Facebook, which has spent years taking heat from Apple over its privacy missteps, has taken issue with the iPhone maker’s approach to CSAM. WhatsApp chief Will Cathcart described it as “an Apple built and operated surveillance system.”

More specifically, there are real concerns that once such a system is created, Apple could be pressured, either by law enforcement or governments, to look for other types of material. While CSAM detection is only going to be in the US to start, Apple has suggested it could eventually expand to other countries and work with other organizations. It’s not difficult to imagine scenarios where Apple could be pressured to start looking for other types of content that’s illegal in some countries. The company’s concessions in China, where Apple reportedly ceded control of its data centers to the Chinese government, are cited as proof that the company isn’t immune to the demands of less-democratic governments.

There are other questions too. One is whether it’s possible for someone to abuse this process by maliciously getting CSAM onto someone’s device in order to make them lose access to their iCloud account. Another is whether there could be a false positive, or some other scenario that results in someone being incorrectly flagged by the company’s algorithms.

What does Apple say about this?

Apple has strongly denied that it’s degrading privacy or walking back its previous commitments. The company published a second document in which it addresses many of these claims.

On the issue of false positives, Apple has repeatedly emphasized that it is only comparing users’ photos against a collection of known child exploitation material, so images of, say, your own children won’t trigger a report. Additionally, Apple has said that the odds of a false positive are around one in a trillion when you factor in the fact that a certain number of images must be detected in order to even trigger a review. Crucially, though, Apple is basically saying we just have to take their word on that. As Facebook’s former security chief Alex Stamos and security researcher Matthew Green wrote in a joint New York Times op-ed, Apple hasn’t provided outside researchers with much visibility into how all this works.
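To see why requiring several matches drives down the odds of a false flag, consider a rough back-of-the-envelope sketch. Every number here is invented for illustration (the per-photo error rate, the library size and the thresholds), and it assumes each photo is an independent trial, which may not hold in practice. It does not reproduce or verify Apple's one-in-a-trillion figure.

```python
import math

def log_binomial_pmf(n, k, p):
    """Log-probability of exactly k false matches among n independent photos."""
    log_choose = math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
    return log_choose + k * math.log(p) + (n - k) * math.log1p(-p)

def prob_at_least(n, threshold, p):
    """Probability of at least `threshold` false matches among n photos."""
    return sum(math.exp(log_binomial_pmf(n, k, p))
               for k in range(threshold, n + 1))

# All values below are assumptions chosen only to illustrate the effect.
library_size = 10_000          # photos in a hypothetical library
per_photo_error = 1e-6         # assumed chance one photo falsely matches

for threshold in (1, 2, 5):
    odds = prob_at_least(library_size, threshold, per_photo_error)
    print(f"threshold={threshold}: {odds:.3e}")
```

Under these made-up numbers, each additional required match cuts the probability of a false flag by orders of magnitude, which is the general effect Apple is pointing to.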

Apple says that its manual review, which relies on human reviewers, would be able to detect if CSAM was on a device as the result of some kind of malicious attack.

When it comes to pressure from governments or law enforcement agencies, the company has basically said that it would refuse to cooperate with such requests. “We have faced demands to build and deploy government-mandated changes that degrade the privacy of users before, and have steadfastly refused those demands,” it writes. “We will continue to refuse them in the future. Let us be clear, this technology is limited to detecting CSAM stored in iCloud and we will not accede to any government’s request to expand it.” Although, once again, we kind of just have to take Apple at its word here.

If it’s so controversial, why is Apple doing it?

The short answer is that the company thinks this strikes the right balance between increasing child safety and protecting privacy. CSAM is illegal and, in the US, companies are obligated to report it when they find it. As a result, CSAM detection features have been baked into popular services for years. But unlike other companies, Apple hasn’t checked for CSAM in users’ photos, largely due to its stance on privacy. Unsurprisingly, this has been a major source of frustration for child safety organizations and law enforcement.

To put this in perspective, in 2019 Facebook reported 65 million instances of CSAM on its platform, according to The New York Times. Google reported 3.5 million photos and videos, while Twitter and Snap reported “more than 100,000.” Apple, on the other hand, reported 3,000 photos.

That’s not because child predators don’t use Apple services, but because Apple hasn’t been nearly as aggressive as some other platforms in looking for this material, and its privacy features have made it difficult to do so. What’s changed now is that Apple says it’s come up with a technical means of detecting collections of known CSAM in iCloud Photos libraries that still respects users’ privacy. Obviously, there’s a lot of disagreement over the details and whether any kind of detection system can truly be “private.” But Apple has calculated that the tradeoff is worth it. “If you’re storing a collection of CSAM material, yes, this is bad for you,” Apple’s head of privacy told The New York Times. “But for the rest of you, this is no different.”

All products recommended by Engadget are selected by our editorial team, independent of our parent company. Some of our stories include affiliate links. If you buy something through one of these links, we may earn an affiliate commission.