For people, figuring out objects in a scene — whether or not that’s an avocado or an Aventador, a pile of mashed potatoes or an alien mothership — is so simple as taking a look at them. But for synthetic intelligence and laptop imaginative and prescient programs, creating a high-fidelity understanding of their environment takes a bit extra effort. Well, much more effort. Around 800 hours of hand-labeling coaching photos effort, if we’re being particular. To assist machines higher see the way in which folks do, a crew of researchers at MIT CSAIL in collaboration with Cornell University and Microsoft have developed STEGO, an algorithm capable of determine photos right down to the person pixel.

MIT CSAIL

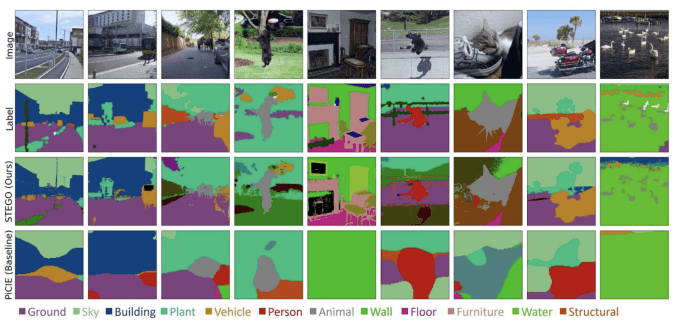

Normally, creating CV coaching knowledge entails a human drawing containers round particular objects inside a picture — say, a field across the canine sitting in a subject of grass — and labeling these containers with what’s inside (“dog”), in order that the AI educated on will probably be capable of inform the canine from the grass. STEGO (Self-supervised Transformer with Energy-based Graph Optimization), conversely, makes use of a method often called semantic segmentation, which applies a category label to every pixel within the picture to present the AI a extra correct view of the world round it.

Whereas a labeled field would have the thing plus different objects within the surrounding pixels inside the boxed-in boundary, semantic segmentation labels each pixel within the object, however solely the pixels that comprise the thing — you get simply canine pixels, not canine pixels plus some grass too. It’s the machine studying equal of utilizing the Smart Lasso in Photoshop versus the Rectangular Marquee software.

The drawback with this system is one in all scope. Conventional multi-shot supervised programs typically demand hundreds, if not tons of of hundreds, of labeled photos with which to coach the algorithm. Multiply that by the 65,536 particular person pixels that make up even a single 256×256 picture, all of which now should be individually labeled as nicely, and the workload required shortly spirals into impossibility.

Instead, “STEGO looks for similar objects that appear throughout a dataset,” the CSAIL crew wrote in a press launch Thursday. “It then associates these similar objects together to construct a consistent view of the world across all of the images it learns from.”

“If you’re looking at oncological scans, the surface of planets, or high-resolution biological images, it’s hard to know what objects to look for without expert knowledge. In emerging domains, sometimes even human experts don’t know what the right objects should be,” MIT CSAIL PhD pupil, Microsoft Software Engineer, and the paper’s lead author Mark Hamilton mentioned. “In these types of situations where you want to design a method to operate at the boundaries of science, you can’t rely on humans to figure it out before machines do.”

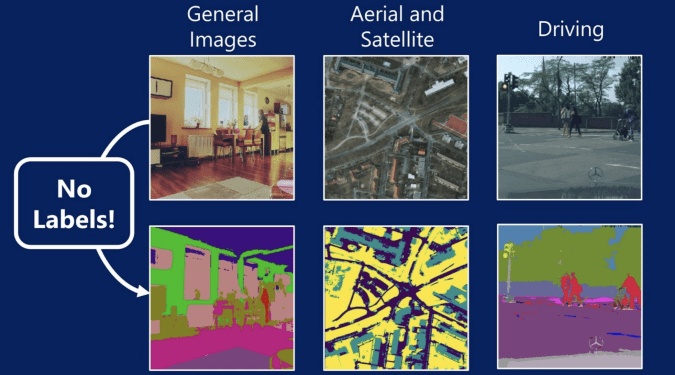

Trained on all kinds of picture domains — from house interiors to excessive altitude aerial pictures — STEGO doubled the efficiency of earlier semantic segmentation schemes, intently aligning with the picture value determinations of the human management. What’s extra, “when applied to driverless car datasets, STEGO successfully segmented out roads, people, and street signs with much higher resolution and granularity than previous systems. On images from space, the system broke down every single square foot of the surface of the Earth into roads, vegetation, and buildings,” the MIT CSAIL crew wrote.

MIT CSAIL

“In making a general tool for understanding potentially complicated data sets, we hope that this type of an algorithm can automate the scientific process of object discovery from images,” Hamilton mentioned. “There’s a lot of different domains where human labeling would be prohibitively expensive, or humans simply don’t even know the specific structure, like in certain biological and astrophysical domains. We hope that future work enables application to a very broad scope of data sets. Since you don’t need any human labels, we can now start to apply ML tools more broadly.”

Despite its superior efficiency to the programs that got here earlier than it, STEGO does have limitations. For instance, it will possibly determine each pasta and grits as “food-stuffs” however would not differentiate between them very nicely. It additionally will get confused by nonsensical photos, reminiscent of a banana sitting on a telephone receiver. Is this a food-stuff? Is this a pigeon? STEGO can’t inform. The crew hopes to construct a bit extra flexibility into future iterations, permitting the system to determine objects beneath a number of courses.

All merchandise really helpful by Engadget are chosen by our editorial crew, unbiased of our father or mother firm. Some of our tales embrace affiliate hyperlinks. If you purchase one thing by one in all these hyperlinks, we could earn an affiliate fee.

#MITs #latest #laptop #imaginative and prescient #algorithm #identifies #photos #pixel #Engadget