Last week, Apple announced a series of new features targeted at child safety on its devices. Though not live yet, the features will arrive later this year for users. Though the goals of these features are universally accepted to be good ones (the protection of minors and limiting the spread of Child Sexual Abuse Material, or CSAM), there have been some questions about the methods Apple is using.

I spoke to Erik Neuenschwander, Head of Privacy at Apple, about the new features launching for its devices. He shared detailed answers to many of the concerns that people have about the features and talked at length about some of the tactical and strategic issues that could come up once this system rolls out.

I also asked about the rollout of the features, which come closely intertwined but are really completely separate systems that have similar goals. To be specific, Apple is announcing three different things here, some of which are being confused with one another in coverage and in the minds of the public.

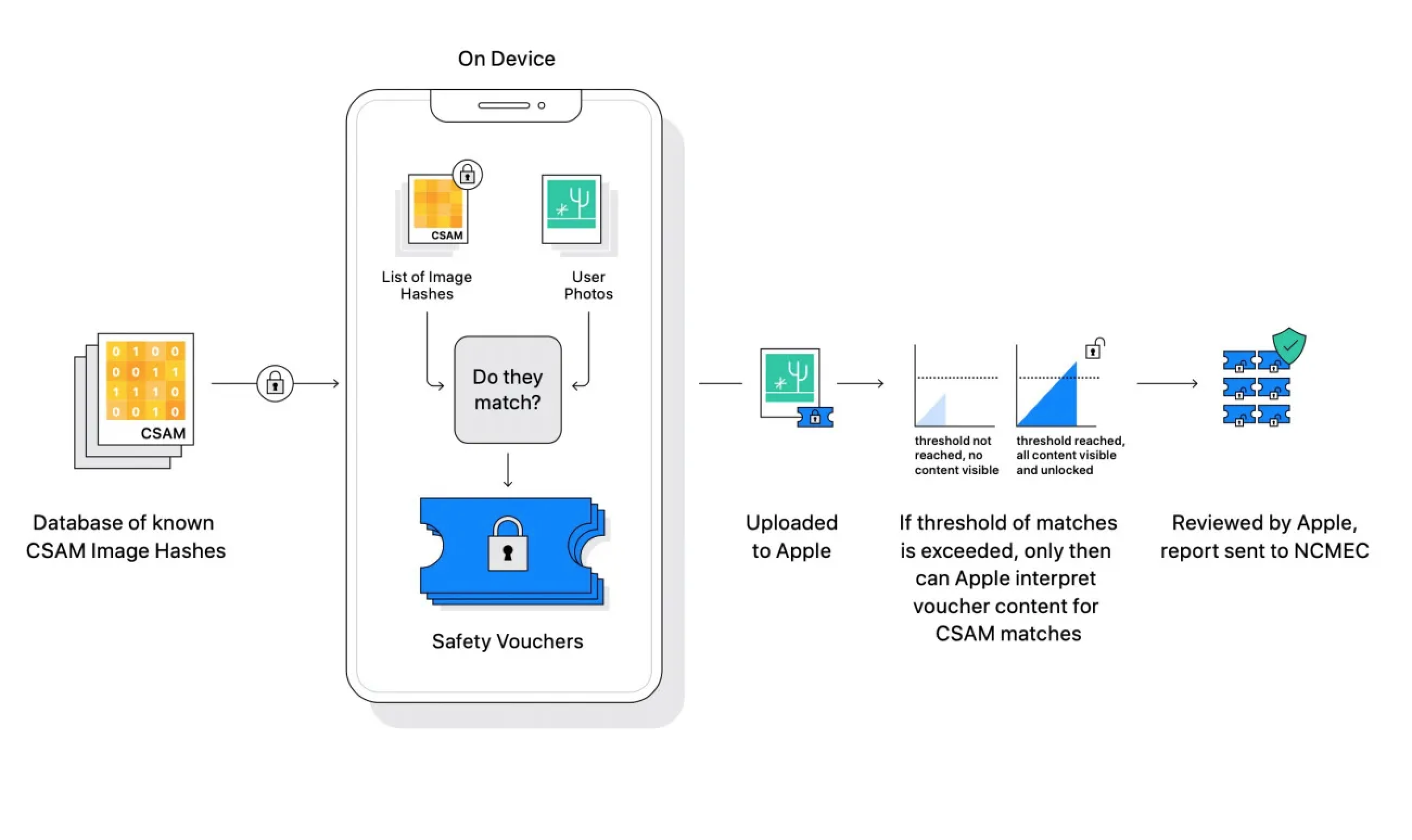

CSAM detection in iCloud Photos – A detection system called NeuralHash creates identifiers it can compare with IDs from the National Center for Missing and Exploited Children and other entities to detect known CSAM content in iCloud Photo libraries. Most cloud providers already scan user libraries for this information. Apple’s system is different in that it does the matching on device rather than in the cloud.

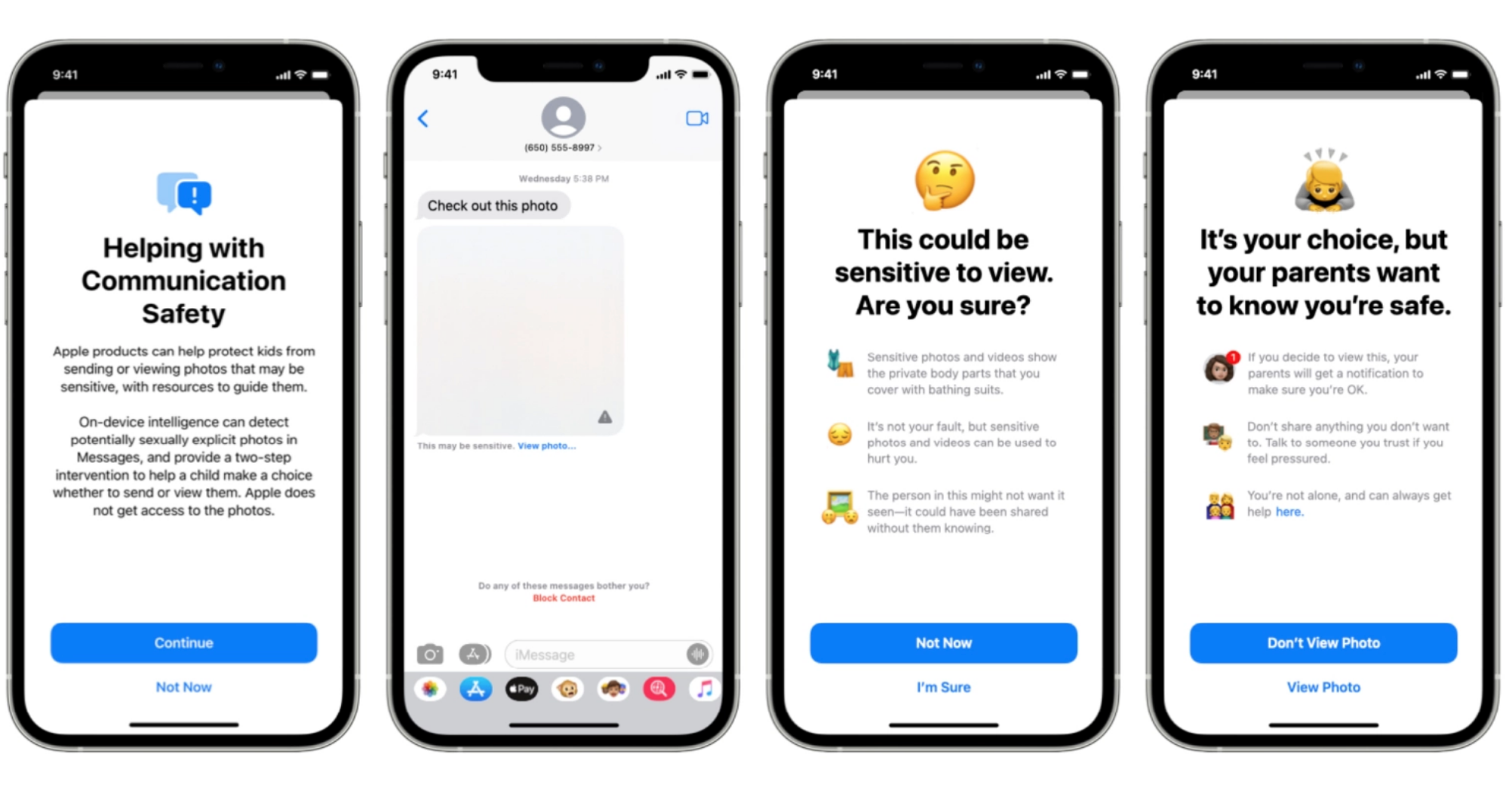

Communication Safety in Messages – A feature that a parent opts to turn on for a minor on their iCloud Family account. It will alert children when an image they are going to view has been detected to be explicit, and it tells them that it will also alert the parent.

Interventions in Siri and search – A feature that will intervene when a user tries to search for CSAM-related terms through Siri and search, and will inform the user of the intervention and offer resources.

For more on all of these features you can read our articles linked above or Apple’s new FAQ that it posted this weekend.

From personal experience, I know that there are people who don’t understand the difference between those first two systems, or assume that there will be some possibility that they may come under scrutiny for innocent pictures of their own children that may trigger some filter. It’s led to confusion in what is already a complex rollout of announcements. These two systems are completely separate, of course, with CSAM detection looking for precise matches with content that is already known to organizations to be abuse imagery. Communication Safety in Messages takes place entirely on the device and reports nothing externally. It’s just there to flag to a child that they are, or could be, about to view explicit images. This feature is opt-in by the parent, and it is transparent to both parent and child that it is enabled.

There have also been questions about the on-device hashing of photos to create identifiers that can be compared with the database. Though NeuralHash is a technology that can be used for other kinds of features, like faster search in photos, it’s not currently used for anything else on iPhone aside from CSAM detection. When iCloud Photos is disabled, the feature stops working completely. This offers an opt-out for people, but at an admittedly steep cost given the convenience and integration of iCloud Photos with Apple’s operating systems.
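To make the idea of hashing a photo into a comparable identifier concrete, here is a minimal sketch. It does not reproduce NeuralHash, whose internals are not described in this article. As a stand-in it uses a far simpler perceptual hash (an "average hash") over a tiny grayscale grid, and the function names `average_hash` and `matches_known` are hypothetical. The point is only that a visually similar image can map to the same identifier, which can then be looked up in a set of known identifiers.

```python
from typing import List, Set

Image = List[List[int]]  # grayscale pixels (0-255), stored as rows


def average_hash(pixels: Image) -> int:
    """Pack one bit per pixel: 1 if the pixel is brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def matches_known(identifier: int, known: Set[int]) -> bool:
    """Exact lookup of an identifier in a set of known identifiers."""
    return identifier in known


# Illustrative 2x2 "images"; a real system would work on normalized photos.
original = [[10, 200], [220, 30]]
brighter = [[20, 210], [230, 40]]   # same picture, slightly brightened
unrelated = [[200, 10], [30, 220]]

known_ids = {average_hash(original)}
print(matches_known(average_hash(brighter), known_ids))
print(matches_known(average_hash(unrelated), known_ids))
```

A plain cryptographic hash would change completely after a small brightness edit, which is why systems of this kind rely on perceptual hashing instead.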

Though this interview won’t answer every possible question related to these new features, this is the most extensive on-the-record discussion by Apple’s senior privacy member. It seems clear from Apple’s willingness to provide access and its ongoing FAQs and press briefings (there have been at least three so far and likely many more to come) that it feels that it has a good solution here.

Despite the concerns and resistance, it seems as if it is willing to take as much time as is necessary to convince everyone of that.

This interview has been lightly edited for clarity.

TC: Most other cloud providers have been scanning for CSAM for some time now. Apple has not. Obviously there are no current regulations that say that you must seek it out on your servers, but there is some roiling regulation in the EU and other countries. Is that the impetus for this? Basically, why now?

Erik Neuenschwander: Why now comes down to the fact that we’ve now got the technology that can balance strong child safety and user privacy. This is an area we’ve been looking at for some time, including current state-of-the-art techniques, which mostly involve scanning through the entire contents of users’ libraries on cloud services. That, as you point out, isn’t something that we’ve ever done: to look through users’ iCloud Photos. This system doesn’t change that either. It neither looks through data on the device, nor does it look through all photos in iCloud Photos. Instead, what it does is give us a new ability to identify accounts which are starting collections of known CSAM.

So the development of this new CSAM detection technology is the watershed that makes now the time to launch this. And Apple feels that it can do it in a way that it feels comfortable with and that is ‘good’ for your users?

That’s exactly right. We have two co-equal goals here. One is to improve child safety on the platform and the second is to preserve user privacy. And what we’ve been able to do across all three of the features is bring together technologies that let us deliver on both of those goals.

Announcing the Communication Safety in Messages features and the CSAM detection in iCloud Photos system at the same time seems to have created confusion about their capabilities and goals. Was it a good idea to announce them concurrently? And why were they announced concurrently, if they are separate systems?

Well, while they are [two] systems, they are also of a piece, along with our increased interventions that will be coming in Siri and search. As important as it is to identify collections of known CSAM where they are stored in Apple’s iCloud Photos service, it’s also important to try to get upstream of that already horrible situation. So CSAM detection means that there’s already known CSAM that has been through the reporting process, and is being shared widely, re-victimizing children on top of the abuse that had to happen to create that material in the first place. And so to do that, I think, is an important step, but it is also important to do things to intervene earlier on, when people are beginning to enter into this problematic and harmful area, or if there are already abusers trying to groom or to bring children into situations where abuse can take place. Communication Safety in Messages and our interventions in Siri and search actually strike at those parts of the process. So we’re really trying to disrupt the cycles that lead to CSAM that then ultimately might get detected by our system.

Governments and agencies worldwide are constantly pressuring all large organizations that have any sort of end-to-end or even partial encryption enabled for their users. They often lean on CSAM and possible terrorism activities as rationale to argue for backdoors or encryption defeat measures. Is launching the feature and this capability with on-device hash matching an effort to stave off those requests and say, look, we can provide you with the information that you require to track down and prevent CSAM activity, but without compromising a user’s privacy?

So, first, you talked about the device matching, so I just want to underscore that the system as designed doesn’t reveal (in the way that people might traditionally think of a match) the result of the match to the device or, even if you consider the vouchers that the device creates, to Apple. Apple is unable to process individual vouchers; instead, all the properties of our system mean that it’s only once an account has accumulated a collection of vouchers associated with illegal, known CSAM images that we are able to learn anything about the user’s account.

Now, why to do it is because, as you said, this is something that will provide that detection capability while preserving user privacy. We’re motivated by the need to do more for child safety across the digital ecosystem, and all three of our features, I think, take very positive steps in that direction. At the same time we’re going to leave privacy undisturbed for everyone not engaged in the illegal activity.
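The property described in this answer, that nothing can be learned from individual vouchers and only a collection unlocks anything, is the kind of guarantee a threshold secret sharing scheme provides. Apple’s public technical summary names threshold secret sharing as one of the system’s building blocks, but the sketch below is not Apple’s protocol. It is a toy Shamir scheme, assuming a hypothetical decryption key split so that each voucher carries one share; the names `split_secret` and `recover_secret` and the choice of prime are illustrative assumptions.

```python
import secrets
from typing import List, Tuple

PRIME = 2**127 - 1  # a Mersenne prime; field size chosen for illustration only

Share = Tuple[int, int]  # (x, y) point on the secret polynomial


def split_secret(secret: int, threshold: int, count: int) -> List[Share]:
    """Split `secret` into `count` shares; any `threshold` of them recover it."""
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, count + 1):
        y = 0
        for c in reversed(coeffs):  # Horner evaluation of the polynomial at x
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: List[Share]) -> int:
    """Lagrange-interpolate the polynomial at x = 0 to get the constant term."""
    total = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        total = (total + yi * num * pow(den, -1, PRIME)) % PRIME
    return total


# Hypothetical account key, split so that 3 matching vouchers are required.
account_key = 123456789
shares = split_secret(account_key, threshold=3, count=5)
print(recover_secret(shares[:3]) == account_key)  # enough shares
print(recover_secret(shares[:2]) == account_key)  # below the threshold
```

With fewer shares than the threshold, interpolation yields an unrelated value, so a server holding one or two shares learns nothing about the key.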

Does this, creating a framework to allow scanning and matching of on-device content, create a framework for outside law enforcement to counter with, ‘we can give you a list, we don’t want to look at all of the user’s data but we can give you a list of content that we’d like you to match’? And if you can match it with this content, you can match it with other content we want to search for. How does it not undermine Apple’s current position of ‘hey, we can’t decrypt the user’s device, it’s encrypted, we don’t hold the key’?

It doesn’t change that one iota. The device is still encrypted, we still don’t hold the key, and the system is designed to function on on-device data. What we’ve designed has a device-side component, and it has the device-side component, by the way, for privacy improvements. The alternative of just processing by going through and trying to evaluate users’ data on a server is actually more amenable to changes [without user knowledge], and less protective of user privacy.

Our system involves both an on-device component, where the voucher is created but nothing is learned, and a server-side component, which is where that voucher is sent along with data coming to Apple’s service and processed across the account to learn if there are collections of illegal CSAM. That means that it is a service feature. I understand that it’s a complex attribute that a feature of the service has a portion where the voucher is generated on the device, but again, nothing’s learned about the content on the device. The voucher generation is actually exactly what enables us to not have to begin processing all users’ content on our servers, which we’ve never done for iCloud Photos. It’s those sorts of systems that I think are more troubling when it comes to the privacy properties, or how they could be changed without any user insight or knowledge to do things other than what they were designed to do.

One of the bigger queries about this system is that Apple has said that it will just refuse action if it is asked by a government or other agency to compromise by adding things that are not CSAM to the database to check for them on-device. There are some examples where Apple has had to comply with local law at the highest levels if it wants to operate there, China being an example. So how do we trust that Apple is going to hew to this rejection of interference if pressured or asked by a government to compromise the system?

Well first, that is launching only for US iCloud accounts, and so the hypotheticals seem to bring up generic countries or other countries that aren’t the US when they speak in that way, and therefore it seems to be the case that people agree US law doesn’t offer these kinds of capabilities to our government.

But even in the case where we’re talking about some attempt to change the system, it has a number of protections built in that make it not very useful for trying to identify individuals holding specifically objectionable images. The hash list is built into the operating system. We have one global operating system and don’t have the ability to target updates to individual users, and so hash lists will be shared by all users when the system is enabled. And secondly, the system requires the threshold of images to be exceeded, so trying to seek out even a single image from a person’s device or set of people’s devices won’t work, because the system simply does not provide any knowledge to Apple for single photos stored in our service. And then, thirdly, the system has built into it a stage of manual review where, if an account is flagged with a collection of illegal CSAM material, an Apple team will review that to make sure that it is a correct match of illegal CSAM material prior to making any referral to any external entity. And so the hypothetical requires jumping over a lot of hoops, including having Apple change its internal process to refer material that is not illegal the way known CSAM is, and we don’t believe that there’s a basis on which people will be able to make that request in the US. And the last point that I would just add is that it does still preserve user choice. If a user does not like this kind of functionality, they can choose not to use iCloud Photos, and if iCloud Photos is not enabled, no part of the system is functional.

So if iCloud Photos is disabled, the system does not work, which is the public language in the FAQ. I just wanted to ask specifically: when you disable iCloud Photos, does this system continue to create hashes of your photos on device, or is it completely inactive at that point?

If users are not using iCloud Photos, NeuralHash will not run and will not generate any vouchers. CSAM detection is a neural hash being compared against a database of the known CSAM hashes that are part of the operating system image. None of that piece, nor any of the additional parts, including the creation of the safety vouchers or the uploading of vouchers to iCloud Photos, is functioning if you’re not using iCloud Photos.

In recent years, Apple has often leaned into the fact that on-device processing preserves user privacy. And in nearly every previous case I can think of, that’s true. Scanning photos to identify their content and allow me to search them, for instance. I’d rather that be done locally and never sent to a server. However, in this case, it seems like there may actually be a sort of anti-effect in that you’re scanning locally, but for external use cases, rather than scanning for personal use, creating a ‘less trust’ scenario in the minds of some users. Add to this that every other cloud provider scans it on their servers and the question becomes: why should this implementation, being different from most others, engender more trust in the user rather than less?

I think we’re raising the bar, compared to the industry standard way to do this. Any sort of server-side algorithm that’s processing all users’ photos is putting that data at more risk of disclosure and is, by definition, less transparent in terms of what it’s doing on top of the user’s library. So, by building this into our operating system, we gain the same properties that the integrity of the operating system provides already across so many other features: the one global operating system that’s the same for all users who download it and install it. That one property makes it much more challenging to even see how it would be targeted at an individual user. On the server side that’s actually quite easy, trivial. Having some of those properties, by building it into the device and ensuring it’s the same for all users with the feature enabled, gives a strong privacy property.

Secondly, you point out how use of on-device technology is privacy preserving, and in this case, that’s a representation that I would make to you, again. It’s really the alternative, where users’ libraries have to be processed on a server, that is less private.

The thing that we can say with this system is that it leaves privacy completely undisturbed for every other user who’s not into this illegal behavior. Apple gains no additional knowledge about any user’s cloud library. No user’s iCloud Library has to be processed as a result of this feature. Instead, what we’re able to do is to create these cryptographic safety vouchers. They have mathematical properties that say Apple will only be able to decrypt the contents or learn anything about the images and users specifically that collect photos that match illegal, known CSAM hashes. That’s just not something anyone can say about a cloud processing scanning service, where every single image has to be processed in a clear, decrypted form and run through a routine to determine who knows what. At that point it’s very easy to determine anything you want [about a user’s images], versus our system, where all that is determined is those images that match a set of known CSAM hashes that came directly from NCMEC and other child safety organizations.

Can this CSAM detection feature stay holistic when the device is physically compromised? Sometimes cryptography gets bypassed locally, somebody has the device in hand. Are there any additional layers there?

I think it’s important to underscore how very challenging and expensive and rare this is. It’s not a practical concern for most users, though it’s one we take very seriously, because the protection of data on the device is paramount for us. And so if we engage in the hypothetical where we say that there has been an attack on someone’s device: that is such a powerful attack that there are many things that that attacker could attempt to do to that user. There’s a lot of a user’s data that they could potentially get access to. And the idea that the most valuable thing an attacker (who’s undergone such an extremely difficult action as breaching someone’s device) would want to do is trigger a manual review of an account doesn’t make much sense.

Because, let’s remember, even if the threshold is met and we have some vouchers that are decrypted by Apple, the next stage is a manual review to determine if that account should be referred to NCMEC or not, and that is something that we want to occur only in cases where it’s a legitimate, high-value report. We’ve designed the system in that way, but if we consider the attack scenario you brought up, I think that’s not a very compelling outcome to an attacker.

Why is there a threshold of images for reporting? Isn’t one piece of CSAM content too many?

We want to ensure that the reports that we make to NCMEC are high value and actionable, and one of the notions of all systems is that there’s some uncertainty built in as to whether or not that image matched. And so the threshold allows us to reach that point where we expect a false reporting rate for review of 1 in 1 trillion accounts per year. So, working against the idea that we do not have any interest in looking through users’ photo libraries outside those that are holding collections of known CSAM, the threshold allows us to have high confidence that those accounts that we review are ones that, when we refer to NCMEC, law enforcement will be able to take up and effectively investigate, prosecute and convict.
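The arithmetic behind a match threshold can be sketched with a binomial tail. The numbers below are hypothetical: the interview gives only the account-level target of 1 in 1 trillion per year, not a per-image false match rate or the threshold value. The sketch also assumes false matches on different photos are independent, which is a simplification. Under those assumptions, the chance that an innocent library produces at least `threshold` false matches falls off very quickly as the threshold rises.

```python
import math


def prob_at_least(n: int, p: float, threshold: int) -> float:
    """P(X >= threshold) for X ~ Binomial(n, p), summed in log space."""
    log_p, log_q = math.log(p), math.log1p(-p)
    total = 0.0
    for k in range(threshold, n + 1):
        # log of C(n, k) * p^k * (1-p)^(n-k); lgamma avoids huge factorials.
        log_term = (
            math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
            + k * log_p + (n - k) * log_q
        )
        total += math.exp(log_term)  # underflows harmlessly to 0.0 for tiny terms
    return total


# Hypothetical inputs: a 10,000-photo library and a one-in-a-million
# chance that any single innocent photo falsely matches.
photos, per_image_rate = 10_000, 1e-6
for t in (1, 5, 30):
    print(f"threshold={t}: {prob_at_least(photos, per_image_rate, t):.3e}")
```

With a threshold of one, a single unlucky false match would flag the account; requiring many matches makes an accidental flag far less likely, which is the trade-off described in the answer above.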
