When it comes to online games, we all know the “report” button doesn’t do anything. Regardless of genre, publisher or budget, games launch every day with ineffective systems for reporting abusive players, and some of the largest titles in the world exist in a constant state of apology for harboring toxic environments. Franchises including League of Legends, Call of Duty, Counter-Strike, Dota 2, Overwatch, Ark and Valorant have such hostile communities that this reputation is part of their brands: suggesting these titles to new players includes a warning about the vitriol they’ll experience in chat.

It feels like the report button often sends complaints directly into a trash can, which is then set on fire quarterly by the one-person moderation department. According to legendary Quake and Doom esports pro Dennis Fong (better known as Thresh), that’s not far from the truth at many AAA studios.

“I’m not gonna name names, but some of the biggest games in the world were like, you know, honestly it does go nowhere,” Fong said. “It goes to an inbox that no one looks at. You feel that as a gamer, right? You feel despondent because you’re like, I’ve reported the same guy 15 times and nothing’s happened.”

Game developers and publishers have had decades to figure out how to combat player toxicity on their own, but they still haven’t. So, Fong did.

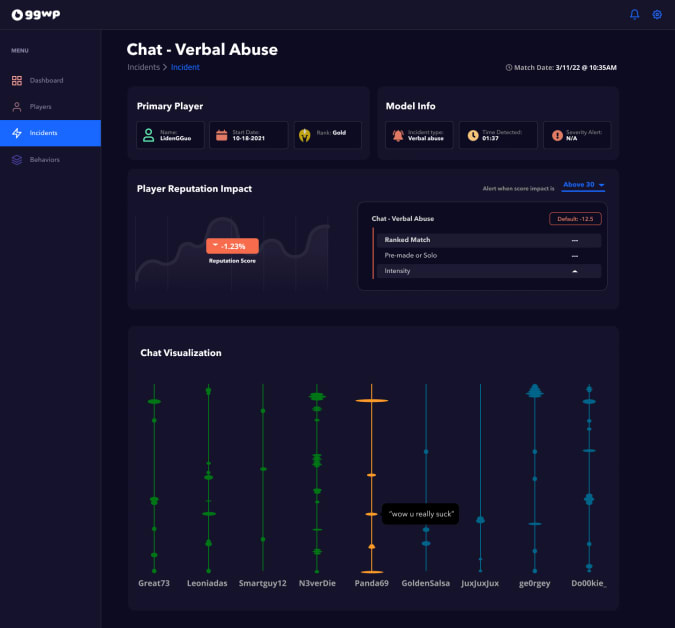

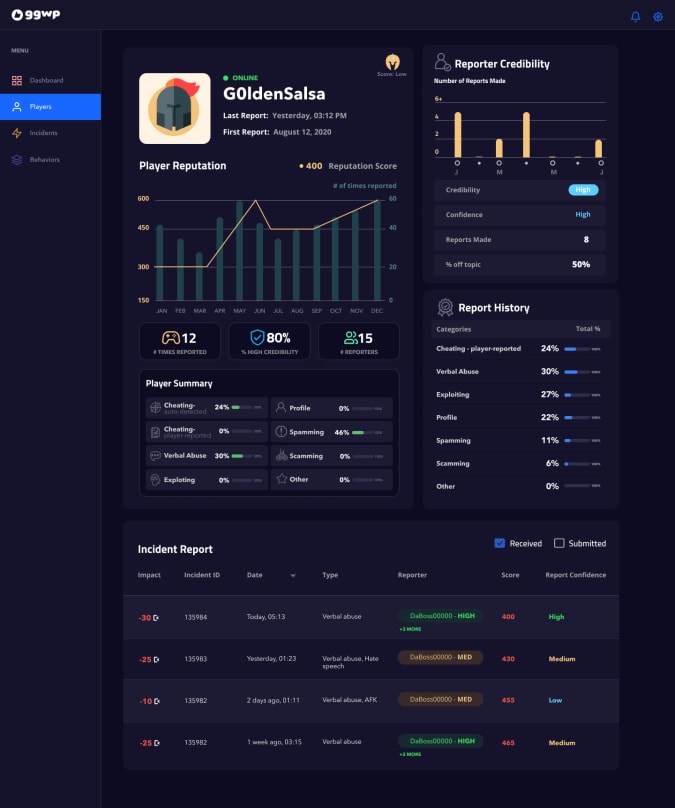

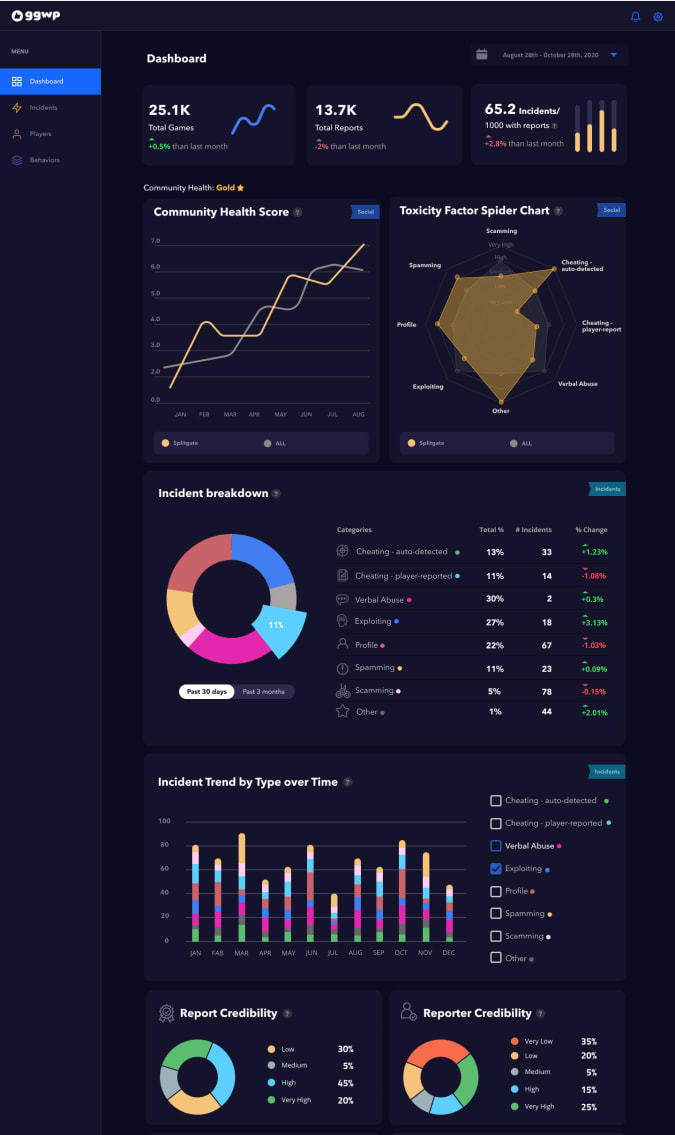

This week he announced GGWP, an AI-powered system that collects and organizes player-behavior data in any game, allowing developers to address every incoming report with a mix of automated responses and real-person reviews. Once it’s introduced to a game (“Literally it’s like a line of code,” Fong said), the GGWP API aggregates player data to generate a community health score and break down the types of toxicity common to that title. After all, every game is a gross snowflake when it comes to in-chat abuse.

GGWP

The system can also assign reputation scores to individual players, based on an AI-led analysis of reported matches and a complex understanding of each game’s culture. Developers can then assign responses to certain reputation scores and even specific behaviors, warning players about a dip in their scores or just breaking out the ban hammer. The system is fully customizable, allowing a title like Call of Duty: Warzone to have different rules than, say, Roblox.
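To make the idea of per-game rules concrete, here is a minimal Python sketch of how a developer might map reputation scores to responses. It is purely illustrative and not GGWP’s actual API: the `Rule` class, the `pick_response` function, the score scale and both rule sets are assumptions invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical rule: applies when a player's score is at or below max_score."""
    max_score: int
    response: str  # made-up action label chosen by the developer


def pick_response(score: int, rules: list[Rule]) -> str:
    """Return the response of the strictest rule that the score falls under."""
    # Check the lowest thresholds first so the harshest matching rule wins.
    for rule in sorted(rules, key=lambda r: r.max_score):
        if score <= rule.max_score:
            return rule.response
    return "none"


# Two invented rule sets: the same score can trigger different responses
# depending on how strict a given title chooses to be.
SHOOTER_RULES = [Rule(max_score=20, response="ban"), Rule(max_score=50, response="warn")]
KIDS_GAME_RULES = [Rule(max_score=40, response="ban"), Rule(max_score=70, response="warn")]

print(pick_response(35, SHOOTER_RULES))    # warn
print(pick_response(35, KIDS_GAME_RULES))  # ban
```

In this sketch, a player with a score of 35 gets a warning under the looser rule set and a ban under the stricter one, which is the kind of per-title customization described above.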

“We very quickly realized that, first of all, a lot of these reports are the same,” Fong said. “And because of that, you can actually use big data and artificial intelligence in ways to help triage this stuff. The vast majority of this stuff is actually almost perfectly primed for AI to go tackle this problem. And it’s just people just haven’t gotten around to it yet.”

GGWP is the brainchild of Fong, Crunchyroll founder Kun Gao, and data and AI expert Dr. George Ng. It has so far secured $12 million in seed funding, backed by Sony Innovation Fund, Riot Games, YouTube founder Steve Chen, the streamer Pokimane, and Twitch co-founders Emmett Shear and Kevin Lin, among other investors.


Fong and his cohorts started building GGWP more than a year ago, and given their ties to the industry, they were able to sit down with AAA studio executives and ask why moderation was such a persistent issue. The problem, they discovered, was twofold: First, these studios didn’t see toxicity as a problem they created, so they weren’t taking responsibility for it (we can call this the Zuckerberg Special). And second, there was simply too much abuse to manage.

In just one year, one major game received more than 200 million player-submitted reports, Fong said. Several other studio heads he spoke with shared figures in the nine digits as well, with players generating hundreds of millions of reports annually per title. And the problem was even larger than that.

“If you’re getting 200 million for one game of players reporting each other, the scale of the problem is so monumentally large,” Fong said. “Because as we just talked about, people have given up because it doesn’t go anywhere. They just stop reporting people.”

Executives told Fong they simply couldn’t hire enough people to keep up. What’s more, they generally weren’t interested in forming a team just to craft an automated solution: if they had AI people on staff, they wanted them building the game, not a moderation system.

In the end, most AAA studios ended up dealing with about 0.1 percent of the reports they received each year, and their moderation teams tended to be laughably small, Fong discovered.


“Some of the biggest publishers in the world, their anti-toxicity player behavior teams are less than 10 people in total,” Fong said. “Our team is 35. It’s 35 and it’s all product and engineering and data scientists. So we as a team are larger than almost every global publisher’s team, which is kind of sad. We are very much devoted and committed to trying to help solve this problem.”

Fong wants GGWP to introduce a new way of thinking about moderation in games, with a focus on implementing teachable moments, rather than straight punishment. The system is able to recognize helpful behavior like sharing weapons and reviving teammates under adverse conditions, and can apply bonuses to that player’s reputation score in response. It would also allow developers to implement real-time in-game notifications, like an alert that says, “you’ve lost 3 reputation points” when a player uses an unacceptable word. This would hopefully dissuade them from saying the word again, decreasing the number of overall reports for that game, Fong said. A studio would have to do a little extra work to implement such a notification system, but GGWP can handle it, according to Fong.
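As a rough illustration of that bonus-and-penalty loop, the Python sketch below adjusts a reputation score per event and produces an alert only when points are lost. Everything in it is hypothetical: the event names, the point values (other than the 3-point loss from the example alert) and the `apply_event` function are assumptions made for this sketch, not part of GGWP’s system.

```python
from typing import Optional, Tuple

# Hypothetical point values; only the 3-point penalty echoes the alert quoted above.
ADJUSTMENTS = {
    "revive_teammate": 2,   # helpful behavior earns a bonus
    "share_weapon": 1,      # helpful behavior earns a bonus
    "banned_word": -3,      # unacceptable word costs points
}


def apply_event(score: int, event: str) -> Tuple[int, Optional[str]]:
    """Return the updated score and an in-game alert (None unless points were lost)."""
    delta = ADJUSTMENTS.get(event, 0)  # unknown events leave the score unchanged
    new_score = score + delta
    message = None
    if delta < 0:
        message = f"you've lost {-delta} reputation points"
    return new_score, message


score, alert = apply_event(50, "banned_word")
print(score, alert)  # 47 you've lost 3 reputation points
```

A real integration would also have to deliver the alert inside the game client, which is the extra studio-side work Fong refers to.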

“We’ve completely modernized the approach to moderation,” he said. “They just have to be willing to give it a try.”

